Mapping the Effectiveness of EIS

Posted April 25, 2022

At their core, early intervention systems (EIS) share philosophies with the personnel development and human resources best practices commonly seen in the private sector. Their goal is to help evaluate an officer’s (that is, an employee’s) performance and to identify areas for improvement and opportunities for support. In law enforcement, however, the stakes are much higher, because these performance issues relate directly to critical matters like agency readiness and maintaining the public’s trust.

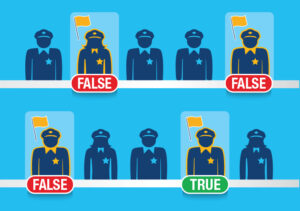

Given that early intervention systems can significantly affect public trust and the perception of policing, it stands to reason that they should be held to a more rigorous standard than the performance review technology used in much of the private sector. False negatives produced by faulty analysis are potentially costly, increasing an agency’s risk of a lawsuit or civil rights claim. What’s more, failing to provide adequate support in the case of a true positive can have a similar effect. This article explores the intersection of technology and human performance and how their relationship can support, or hinder, a high-performing early intervention program.

Building the Tech

The first iterations of early intervention systems were relatively primitive compared to some of the more sophisticated systems in use today. Most notably, technological limitations in processing power and data storage capacity made complex, resource-intensive data analysis difficult. These early systems also lacked the benefit of decades of research and on-the-ground experience, which are critical elements of an effective EIS. Given these limitations, most of those early systems relied on rules-based thresholds, and almost all systems in use today still do. Common threshold types include:

- Department-level thresholds: Standards set at the department level, such as a certain number of complaints within a specified period.

- Performance indicator ratios: As the name implies, these are ratios between two performance variables, such as complaints relative to shifts worked.

- Peer-officer average thresholds: A “like-to-like” comparison of officers in similar assignments or positions.
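To make the three rule types concrete, here is a minimal Python sketch of how a threshold-based EIS might evaluate one officer. Everything in it is hypothetical: the `OfficerRecord` fields, the `threshold_flags` function, the three-complaint limit, the 0.05 complaints-per-shift cutoff, the 1.5x peer-average multiplier, and the four-officer roster are illustrative assumptions, not values from any real agency or product.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class OfficerRecord:
    # Hypothetical per-officer summary for a single review period.
    officer_id: str
    assignment: str
    complaints: int
    shifts_worked: int


# Hypothetical rule parameters for illustration only.
DEPT_COMPLAINT_LIMIT = 3         # department-level threshold (count per period)
MAX_COMPLAINTS_PER_SHIFT = 0.05  # performance indicator ratio cutoff
PEER_MULTIPLIER = 1.5            # flag if above 1.5x the peer average


def threshold_flags(officer: OfficerRecord, roster: list[OfficerRecord]) -> list[str]:
    """Return the name of every threshold rule this officer trips."""
    flags = []

    # 1. Department-level threshold: a fixed count within the period.
    if officer.complaints >= DEPT_COMPLAINT_LIMIT:
        flags.append("department_threshold")

    # 2. Performance indicator ratio: complaints relative to shifts worked.
    if officer.shifts_worked > 0:
        if officer.complaints / officer.shifts_worked > MAX_COMPLAINTS_PER_SHIFT:
            flags.append("indicator_ratio")

    # 3. Peer-officer average: compare like to like within the same assignment,
    #    excluding the officer being evaluated.
    peer_counts = [
        o.complaints
        for o in roster
        if o.assignment == officer.assignment and o.officer_id != officer.officer_id
    ]
    if peer_counts and officer.complaints > PEER_MULTIPLIER * mean(peer_counts):
        flags.append("peer_average")

    return flags


roster = [
    OfficerRecord("A", "patrol", complaints=4, shifts_worked=60),
    OfficerRecord("B", "patrol", complaints=1, shifts_worked=60),
    OfficerRecord("C", "patrol", complaints=1, shifts_worked=60),
    OfficerRecord("D", "investigations", complaints=2, shifts_worked=20),
]
for o in roster:
    print(o.officer_id, threshold_flags(o, roster))
# A ['department_threshold', 'indicator_ratio', 'peer_average']
# B []
# C []
# D ['indicator_ratio']
```

Each rule checks one or two variables against a fixed cutoff and cannot weigh the context around a flag. In this toy roster, officer D trips the ratio rule largely because of a small number of shifts worked, which illustrates how sensitive fixed rules can be to the inputs they happen to see.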

In the early 1990s, early intervention systems began to appear more frequently in the best practice recommendations of the major professional and certification organizations. Then, starting in 1997, federal consent decrees frequently mandated the use of EIS as part of broader reform-minded measures. As EIS were more widely deployed, researchers gained a larger sample of agencies with which to study these systems’ efficacy and identify where they could be improved.

Researchers studying early intervention systems came to recognize that threshold-based systems had poor predictive ability. One study found that a threshold-based system deployed in a major metropolitan police department with more than 1,800 sworn officers incorrectly flagged hundreds of officers and failed to identify many others who needed intervention support. Even when additional variables were added to these rudimentary threshold-based systems, the evidence showed they generally lacked the contextual analysis needed to perform as well as systems that incorporated predictive modeling. The same study found that when the department deployed a new, modeling-based EIS developed at the University of Chicago (now offered by Benchmark Analytics as First Sign® Early Intervention), false positives fell by 20% and true positives rose by 75%, demonstrating the advantages of a more sophisticated, predictive EIS.
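The study’s results are stated in terms of true positives (officers flagged who did go on to need intervention) and false positives (officers flagged who did not). The post does not describe how the study defined or measured these, or whether its percentages are relative changes, so the Python sketch below is only an illustration under stated assumptions: each officer has an EIS flag and a later, independently observed outcome, and a change between two systems is expressed as a relative percentage. The `Confusion` class, the `confusion` and `relative_change` functions, and all counts are hypothetical, made-up numbers, not the study’s data.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    true_pos: int   # flagged, and a later adverse outcome occurred
    false_pos: int  # flagged, but no adverse outcome occurred
    false_neg: int  # not flagged, but a later adverse outcome occurred
    true_neg: int   # not flagged, and no adverse outcome occurred


def confusion(flags: list[bool], outcomes: list[bool]) -> Confusion:
    """Tally EIS flags against later outcomes, one entry per officer."""
    if len(flags) != len(outcomes):
        raise ValueError("flags and outcomes must have the same length")
    pairs = list(zip(flags, outcomes))
    return Confusion(
        true_pos=sum(1 for f, o in pairs if f and o),
        false_pos=sum(1 for f, o in pairs if f and not o),
        false_neg=sum(1 for f, o in pairs if not f and o),
        true_neg=sum(1 for f, o in pairs if not f and not o),
    )


def relative_change(old: int, new: int) -> float:
    """Percentage change from old to new; negative values are reductions."""
    if old == 0:
        raise ValueError("relative change is undefined when the old count is 0")
    return 100.0 * (new - old) / old


# Made-up counts for two systems scored on the same hypothetical roster
# (50 officers with a later adverse outcome, 950 without).
old_system = Confusion(true_pos=20, false_pos=100, false_neg=30, true_neg=850)
new_system = Confusion(true_pos=35, false_pos=80, false_neg=15, true_neg=870)
print(relative_change(old_system.false_pos, new_system.false_pos))  # -20.0
print(relative_change(old_system.true_pos, new_system.true_pos))    # 75.0
```

Because both systems are scored against the same officers and outcomes, fewer false positives and more true positives mean fewer unnecessary interventions and more officers who actually need support being reached.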

The Human Element

Early intervention systems are an excellent tool for supervisors and department leaders, but they are still a tool. They can inform personnel decisions but are not intended to be the sole arbiter of those decisions. A well-constructed EIS can point to appropriate, evidence-based interventions, but the follow-through ultimately requires human interaction and support to be effective and lasting. The following practical and human factors can contribute to or detract from an early intervention system’s success:

Accountability: Just as officers are held accountable for their actions on duty, supervisors are ultimately responsible for the quality of support they give their direct reports. Interventions triggered by an EIS are almost always confidential because they can involve human resources issues, sometimes concerning mental health or other sensitive matters. The private nature of these discussions creates a risk of inconsistent intervention tactics, which is a major hurdle for effective intervention because it can lead to a perception of favoritism.

Budget and capital constraints: An EIS requires people to carry out the intervention, whether that is a referral, a conversation, or other ongoing support. All of these take time, which has a cost. Agencies with more resources typically have more robust mental health and officer support systems, whereas agencies with more limited funding must balance immediate and long-term funding needs. Officer mental health and wellness are essential facets of early intervention, and having the resources to maintain these support programs is a significant advantage in fielding an adequate EIS.

EIS design: Just as personal bias can affect intervention decisions, bias in design can reduce the effectiveness of an EIS. Technology is not inherently neutral and, without careful design, can consciously or unconsciously reflect the biases of its creators. Although no research to date has found evidence of this in early intervention systems, basing design decisions on evidence from peer-reviewed research can serve as a safeguard against unconscious bias in technology design.

Making the Technology Work

A new era of police reform has increased public interest in early intervention and made it a priority for the professional organizations that support this research and for the academics who conduct it. Data and experience are driving the newest iterations of these systems, and these insights make clear that incorporating research-based design into a predictive, rather than threshold-based, EIS is the most promising path to effective intervention. In partnership with a diverse research consortium, Benchmark Analytics uses peer-reviewed, research-based design to build its EIS, First Sign®. Benchmark’s data scientists and engineers draw on the world’s largest multi-jurisdictional officer performance database and incorporate iterative learning that uses cumulative analytics to become “smarter” and more efficient over time. This technology gives supervisors a more holistic picture of an officer’s performance, especially relative to peers, and enables more targeted and meaningful interventions.
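The post does not describe how First Sign’s models work, and the following is not a reconstruction of them. As a generic illustration of what “predictive rather than threshold-based” can mean, this Python sketch fits a plain logistic regression by batch gradient descent to hypothetical historical records, then ranks current officers by estimated risk so a supervisor could review the highest scores first. The two features (prior-year complaints and use-of-force reports), the toy history, the function names, the learning rate, and the epoch count are all assumptions for illustration.

```python
import math


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(X: list[list[float]], y: list[int],
                 lr: float = 0.1, epochs: int = 2000) -> list[float]:
    """Fit logistic-regression weights (bias first) with batch gradient descent."""
    w = [0.0] * (len(X[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            err = risk_score(w, xi) - yi  # prediction error for one record
            grad[0] += err
            for j, xj in enumerate(xi):
                grad[j + 1] += err * xj
        # Step against the average gradient of the log loss.
        w = [wj - lr * gj / len(X) for wj, gj in zip(w, grad)]
    return w


def risk_score(w: list[float], x: list[float]) -> float:
    """Estimated probability of a later adverse outcome for one officer."""
    return sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], x)))


# Hypothetical history: [complaints, use-of-force reports] in the prior year,
# and whether an adverse outcome (1) or not (0) followed.
X_hist = [[0, 0], [1, 0], [0, 1], [1, 1], [3, 2], [4, 1], [2, 3], [5, 4]]
y_hist = [0, 0, 0, 0, 1, 1, 1, 1]
w = fit_logistic(X_hist, y_hist)

# Score current (hypothetical) officers, highest estimated risk first.
current = {"A": [1, 0], "B": [4, 2], "C": [2, 1]}
ranked = sorted(current, key=lambda k: risk_score(w, current[k]), reverse=True)
print(ranked)
```

Unlike fixed cutoffs, the weights here are estimated from historical outcomes rather than set by hand, and refitting on newly accumulated records is one simple way such a model can be updated over time. A real system would also need validated outcome definitions, far more data, and checks for bias, none of which this sketch attempts.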